In these few paragraphs I have shared just one of the ways I turbocharge AI training and inference in my garage, using burned-out and discarded H100 GPUs that large companies threw away.

It is worth billions, if not trillions, of dollars to a clever company.

The path is now open source...

30.8.2025

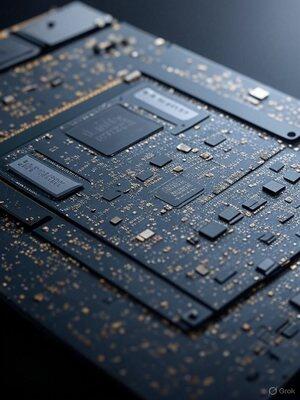

Turbocharge Nvidia AI GPUs: Simple Tricks for Blazing-Fast Performance

In my garage, with no VC investors, I have to make what little research and development I can afford work: I have pennies, yet I get higher speed than companies with billions. This constraint forces me to find ways to squeeze more out of less. I do many things most people can't think of. Here is just one example out of hundreds.

GPUs are powerhouses, packed with thousands of processing units ready to crunch numbers. But, as I discovered, they are often not fully utilized, which leads to sluggish performance.

What did I discover? Smart optimizations that keep those units buzzing, slashing AI render times and delivering massive speed boosts.

First, spot the bottlenecks.

I use profiling tools such as Nvidia Nsight to see what is holding things back, whether it is memory stalls or other limits. Once the bottleneck is identified, I dive in and tweak the code to pack more work into each thread.
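Nsight shows where a kernel stalls; a quick way to sanity-check whether a kernel should be memory-bound or compute-bound is the roofline model. The sketch below is a generic illustration of that standard model, not the author's workflow. The function name is hypothetical, and the peak numbers used in the usage note are placeholders, not real H100 figures.

```python
def roofline_bound(flops: float, bytes_moved: float,
                   peak_flops: float, peak_bandwidth: float) -> tuple[str, float]:
    """Classify a kernel with the roofline model (hypothetical helper).

    flops          -- floating-point operations the kernel performs
    bytes_moved    -- bytes read/written from DRAM
    peak_flops     -- assumed peak compute rate (FLOP/s)
    peak_bandwidth -- assumed peak memory bandwidth (bytes/s)
    Returns the limiting resource and the attainable FLOP/s.
    """
    intensity = flops / bytes_moved              # FLOPs per byte of traffic
    ridge = peak_flops / peak_bandwidth          # intensity where the two roofs meet
    attainable = min(peak_flops, intensity * peak_bandwidth)
    bound = "memory" if intensity < ridge else "compute"
    return bound, attainable


# Placeholder peaks: 100 TFLOP/s and 2 TB/s (assumptions, not a spec sheet).
n = 1_000_000
print(roofline_bound(n, 12 * n, 100e12, 2e12))   # float32 vector add: 1 FLOP per 12 bytes
```

A float32 vector add (two loads, one store per addition) lands far below the ridge point, so no amount of thread-level tuning will make it compute-bound; a large matrix multiply lands above it.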

Simple changes such as unrolling loops or compressing data can hide latency and raise throughput, giving immediate speedups.
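To make the two tricks above concrete, here is a minimal sketch under stated assumptions: a 4-way unrolled sum with independent accumulators (the shape a GPU kernel loop takes after unrolling for instruction-level parallelism) and packing values as 16-bit floats instead of 32-bit to halve the bytes moved. Python itself gains no speed from unrolling; the helper names are hypothetical and this is not the author's code.

```python
import struct


def sum_unrolled4(values: list[float]) -> float:
    """Sum with a 4-way unrolled loop and four independent accumulators.

    Independent accumulators break the serial add-after-add dependency,
    so hardware can keep several additions in flight at once.
    """
    acc0 = acc1 = acc2 = acc3 = 0.0
    n = len(values)
    main = n - n % 4                    # largest multiple of 4 <= n
    for i in range(0, main, 4):         # unrolled body: 4 independent adds
        acc0 += values[i]
        acc1 += values[i + 1]
        acc2 += values[i + 2]
        acc3 += values[i + 3]
    for i in range(main, n):            # remainder loop for leftover elements
        acc0 += values[i]
    return (acc0 + acc1) + (acc2 + acc3)


def pack_fp32(values: list[float]) -> bytes:
    """Pack as little-endian 32-bit floats (4 bytes each)."""
    return struct.pack(f"<{len(values)}f", *values)


def pack_fp16(values: list[float]) -> bytes:
    """Pack as little-endian 16-bit floats (2 bytes each, lossy for many values)."""
    return struct.pack(f"<{len(values)}e", *values)


def unpack_fp16(data: bytes) -> tuple[float, ...]:
    """Inverse of pack_fp16."""
    return struct.unpack(f"<{len(data) // 2}e", data)
```

Half precision halves memory traffic at the cost of range and precision, so it only pays off where the workload tolerates that loss.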

High thread occupancy can sometimes cause cache thrashing. Fix it by deliberately reducing the number of resident threads with dummy code or memory tweaks, which frees up resources for parallel tasks.
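One common way to cap occupancy on purpose is to request more shared memory per block than the kernel actually needs, so fewer blocks fit on each streaming multiprocessor (SM). The simplified occupancy model below uses hypothetical per-SM limits and ignores register and shared-memory allocation granularity; it illustrates the effect and is not the author's exact tweak.

```python
# Hypothetical per-SM limits, chosen for illustration only.
HYPOTHETICAL_SM_LIMITS = {
    "max_threads": 2048,      # resident threads per SM
    "max_blocks": 32,         # resident blocks per SM
    "registers": 65536,       # 32-bit registers per SM
    "shared_mem": 100 * 1024, # bytes of shared memory per SM
}


def resident_blocks_per_sm(threads_per_block: int, regs_per_thread: int,
                           smem_per_block: int, limits: dict[str, int]) -> int:
    """Blocks that fit on one SM: the tightest of the four resource limits."""
    by_threads = limits["max_threads"] // threads_per_block
    by_blocks = limits["max_blocks"]
    by_regs = limits["registers"] // (regs_per_thread * threads_per_block)
    by_smem = (limits["shared_mem"] // smem_per_block
               if smem_per_block else by_blocks)
    return min(by_threads, by_blocks, by_regs, by_smem)


for smem in (8 * 1024, 48 * 1024):      # real need vs. padded "dummy" request
    blocks = resident_blocks_per_sm(256, 32, smem, HYPOTHETICAL_SM_LIMITS)
    print(f"{smem // 1024} KiB/block -> {blocks} blocks, {blocks * 256} threads")
```

Padding the request from 8 KiB to 48 KiB drops residency from 8 blocks (2048 threads) to 2 blocks (512 threads), leaving each remaining thread a larger share of cache.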

The real game-changer? Async compute. Run multiple tasks side by side, filling idle gaps and overlapping heavy loads. Pairing a memory-hungry task with a compute-heavy one lets the GPU multitask, potentially halving run times and supercharging efficiency.
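In CUDA terms, this kind of overlap usually means running transfers and kernels on separate streams. The idealized timing model below compares processing chunks one after another with a two-stage pipeline in which chunk i+1 is copied while chunk i computes. It assumes the copy engine never waits for a free buffer and the two stages do not contend; the function names are hypothetical.

```python
def serial_time(copy_times: list[float], compute_times: list[float]) -> float:
    """Copy then compute each chunk, with no overlap at all."""
    return sum(copy_times) + sum(compute_times)


def pipelined_time(copy_times: list[float], compute_times: list[float]) -> float:
    """Two-stage pipeline: copies stream back to back while kernels run."""
    if len(copy_times) != len(compute_times):
        raise ValueError("need one copy time per compute time")
    copy_done = 0.0      # time at which the latest copy finishes
    compute_done = 0.0   # time at which the latest kernel finishes
    for copy_t, compute_t in zip(copy_times, compute_times):
        copy_done += copy_t
        # A chunk computes once its data has arrived and the previous kernel is done.
        compute_done = max(copy_done, compute_done) + compute_t
    return compute_done


print(serial_time([5.0] * 4, [5.0] * 4), pipelined_time([5.0] * 4, [5.0] * 4))
```

With four balanced chunks of 5 time units per stage, the serial schedule takes 40 units and the pipeline 25. As the number of chunks grows, the pipeline approaches half the serial time, but only when the two stages are roughly balanced.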

These tweaks transform underused GPUs into speed demons. It is not unlike how, in 1986, IBM PC/AT personal computers that came out of the factory at 8 MHz were transformed to run at up to 100 MHz.

I'll write more details about this, but if large AI companies used my opcode-level Nvidia GPU optimizations, they would likely reach AGI rather quickly.

When you know hardware and software at an almost atomic level, you can remake the first principles.
