Interesting. Why is Fukushima not as celebrated as Western AI researchers?

3 Aug, 22:05

Who invented convolutional neural networks (CNNs)?

1969: Fukushima had CNN-relevant ReLUs [2].

1979: Fukushima had the basic CNN architecture with convolution layers and downsampling layers [1]. Compute was 100x more costly than in 1989, and a billion times more costly than today.

1987: Waibel applied Linnainmaa's 1970 backpropagation [3] to weight-sharing TDNNs with 1-dimensional convolutions [4].

1988: Wei Zhang et al. applied "modern" backprop-trained 2-dimensional CNNs to character recognition [5].

All of the above was published in Japan 1979-1988.

1989: LeCun et al. applied CNNs again to character recognition (zip codes) [6,10].

1990-93: Fukushima’s downsampling based on spatial averaging [1] was replaced by max-pooling for 1-D TDNNs (Yamaguchi et al.) [7] and 2-D CNNs (Weng et al.) [8].
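To make the difference between the two downsampling schemes concrete, here is a minimal sketch in plain Python. It is not the formulation from [1], [7], or [8]: the 2x2 non-overlapping windows, the toy feature map, and the helper names `pool2d` and `mean` are all assumptions chosen for illustration.

```python
def pool2d(image, size, reduce_fn):
    """Downsample a 2-D list over non-overlapping size x size windows.

    reduce_fn maps the flattened window values to one number
    (hypothetical helper, for illustration only).
    """
    rows, cols = len(image), len(image[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled_row = []
        for c in range(0, cols - size + 1, size):
            window = [image[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(reduce_fn(window))
        pooled.append(pooled_row)
    return pooled


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Toy 4x4 feature map (made-up values).
feature_map = [
    [1, 0, 2, 2],
    [0, 9, 2, 2],
    [4, 4, 0, 0],
    [4, 4, 0, 8],
]

avg_pooled = pool2d(feature_map, 2, mean)  # spatial averaging
max_pooled = pool2d(feature_map, 2, max)   # max-pooling

print(avg_pooled)  # [[2.5, 2.0], [4.0, 2.0]]
print(max_pooled)  # [[9, 2], [4, 8]]
```

In this toy case, averaging dilutes the single strong activation (9) in the top-left window to 2.5, while max-pooling passes it through unchanged.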

2011: Much later, my team with Dan Ciresan made max-pooling CNNs really fast on NVIDIA GPUs. In 2011, DanNet achieved the first superhuman pattern recognition result [9]. For a while, it enjoyed a monopoly: from May 2011 to Sept 2012, DanNet won every image recognition challenge it entered, 4 of them in a row. Admittedly, however, this was mostly about engineering & scaling up the basic insights from the previous millennium, profiting from much faster hardware.

Some "AI experts" claim that "making CNNs work" (e.g., [5,6,9]) was as important as inventing them. But "making them work" largely depended on whether your lab was rich enough to buy the latest computers required to scale up the original work. It's the same as today. Basic research vs engineering/development - the R vs the D in R&D.

REFERENCES

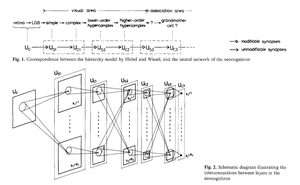

[1] K. Fukushima (1979). Neural network model for a mechanism of pattern recognition unaffected by shift in position — Neocognitron. Trans. IECE, vol. J62-A, no. 10, pp. 658-665, 1979.

[2] K. Fukushima (1969). Visual feature extraction by a multilayered network of analog threshold elements. IEEE Transactions on Systems Science and Cybernetics. 5 (4): 322-333. This work introduced rectified linear units (ReLUs), now used in many CNNs.

[3] S. Linnainmaa (1970). Master's Thesis, Univ. Helsinki, 1970. The first publication on "modern" backpropagation, also known as the reverse mode of automatic differentiation. (See Schmidhuber's well-known backpropagation overview: "Who Invented Backpropagation?")

[4] A. Waibel. Phoneme Recognition Using Time-Delay Neural Networks. Meeting of IEICE, Tokyo, Japan, 1987. Backpropagation for a weight-sharing TDNN with 1-dimensional convolutions.

[5] W. Zhang, J. Tanida, K. Itoh, Y. Ichioka. Shift-invariant pattern recognition neural network and its optical architecture. Proc. Annual Conference of the Japan Society of Applied Physics, 1988. First backpropagation-trained 2-dimensional CNN, with applications to English character recognition.

[6] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, L. D. Jackel: Backpropagation Applied to Handwritten Zip Code Recognition, Neural Computation, 1(4):541-551, 1989. See also Sec. 3 of [10].

[7] K. Yamaguchi, K. Sakamoto, A. Kenji, T. Akabane, Y. Fujimoto. A Neural Network for Speaker-Independent Isolated Word Recognition. First International Conference on Spoken Language Processing (ICSLP 90), Kobe, Japan, Nov 1990. A 1-dimensional convolutional TDNN using Max-Pooling instead of Fukushima's Spatial Averaging [1].

[8] Weng, J., Ahuja, N., and Huang, T. S. (1993). Learning recognition and segmentation of 3-D objects from 2-D images. Proc. 4th Intl. Conf. Computer Vision, Berlin, pp. 121-128. A 2-dimensional CNN whose downsampling layers use Max-Pooling (which has become very popular) instead of Fukushima's Spatial Averaging [1].

[9] In 2011, the fast and deep GPU-based CNN called DanNet (7+ layers) achieved the first superhuman performance in a computer vision contest. See overview: "2011: DanNet triggers deep CNN revolution."

[10] How 3 Turing awardees republished key methods and ideas whose creators they failed to credit. Technical Report IDSIA-23-23, Swiss AI Lab IDSIA, 14 Dec 2023. See also the YouTube video for the Bower Award Ceremony 2021: J. Schmidhuber lauds Kunihiko Fukushima.
