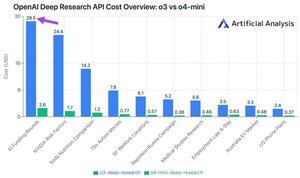

OpenAI's new Deep Research API costs up to ~$30 per API call! These new Deep Research API endpoints might just be the new fastest way to spend money.

Across our 10 deep research test queries, we spent $100 on o3-deep-research and $9.18 on o4-mini-deep-research. How do the costs get so big? High prices and millions of tokens.

These endpoints are versions of o3 and o4-mini that have been fine-tuned with reinforcement learning (RL) for deep research tasks. Because they are available via the API, they can be used with both OpenAI’s web search tool and custom data sources via remote MCP servers.
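As a rough illustration of the two data sources mentioned above, a request to one of these endpoints might be shaped like the dictionary below. This is a sketch assuming the shape of OpenAI's Responses API as documented at the time of writing, not a verified integration. The field names (`tools`, `web_search_preview`, `mcp`, `server_label`, `server_url`, `require_approval`, `background`) are assumptions about what the API accepts. `build_deep_research_request`, the MCP server URL and the server label are hypothetical placeholders. No network call is made here. The dict is meant to be passed as keyword arguments to `client.responses.create(...)` in the official `openai` Python SDK.

```python
from typing import Any, Dict, List, Optional


def build_deep_research_request(
    question: str,
    model: str = "o4-mini-deep-research",
    mcp_server_url: Optional[str] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for a deep research call (assumed Responses API shape)."""
    # OpenAI's web search tool as one data source.
    tools: List[Dict[str, Any]] = [{"type": "web_search_preview"}]
    if mcp_server_url is not None:
        # Optional remote MCP server for custom data; label and URL are hypothetical.
        tools.append({
            "type": "mcp",
            "server_label": "internal_docs",
            "server_url": mcp_server_url,
            # Assumption: deep research runs without interactive tool-approval steps.
            "require_approval": "never",
        })
    return {
        "model": model,
        "input": question,
        # Assumption: long-running research jobs are submitted in background mode.
        "background": True,
        "tools": tools,
    }


request = build_deep_research_request(
    "Summarize recent changes in LLM API pricing.",
    mcp_server_url="https://example.com/mcp",  # hypothetical server
)
print([tool["type"] for tool in request["tools"]])  # ['web_search_preview', 'mcp']
# With the openai SDK installed and OPENAI_API_KEY set, one would then call:
# client = openai.OpenAI(); client.responses.create(**request)
```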

o4-mini-deep-research pricing is 5x lower than o3-deep-research pricing. In our test queries, o4-mini-deep-research also seems to use fewer tokens: it came in over 10x cheaper in total across our 10 test queries.
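The reported totals also allow a back-of-the-envelope estimate of relative token usage. Every o3-deep-research rate is 5x the matching o4-mini-deep-research rate. Dividing the overall spend ratio by 5 therefore gives how many more tokens o3-deep-research consumed, with both models' tokens valued at the same relative rates. This sketch treats the 10 queries as one pooled workload. The variable names are illustrative.

```python
# Total spend across the 10 test queries, as reported above (USD).
totals_usd = {"o3-deep-research": 100.00, "o4-mini-deep-research": 9.18}
n_queries = 10

avg_per_query = {model: total / n_queries for model, total in totals_usd.items()}
spend_ratio = totals_usd["o3-deep-research"] / totals_usd["o4-mini-deep-research"]

# o3-deep-research charges 5x per token on every pricing line, so the remaining
# factor is the ratio of price-weighted token usage between the two models.
PRICE_RATIO = 5
implied_usage_ratio = spend_ratio / PRICE_RATIO

for model, avg in avg_per_query.items():
    print(f"{model}: ${avg:.2f} per query")
print(f"spend ratio ~{spend_ratio:.1f}x, implied usage ratio ~{implied_usage_ratio:.1f}x")
# o3-deep-research: $10.00 per query
# o4-mini-deep-research: $0.92 per query
# spend ratio ~10.9x, implied usage ratio ~2.2x
```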

Pricing:

➤ o3-deep-research is priced at $10 /M input ($2.50 cached input), $40 /M output

➤ o4-mini-deep-research is priced at $2 /M input ($0.5 cached input), $8 /M output
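The sketch below shows how a single call can reach the ~$30 mark, pricing one call from its token counts at the rates above. `Pricing` and `call_cost` are hypothetical helpers. The token counts in the example are made up for illustration, not measured. The function assumes that cached tokens are counted as a subset of the reported input tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Rates in USD per million tokens."""
    input: float
    cached_input: float
    output: float


# Deep Research rates as listed above (USD per 1M tokens).
DEEP_RESEARCH = {
    "o3-deep-research": Pricing(input=10.00, cached_input=2.50, output=40.00),
    "o4-mini-deep-research": Pricing(input=2.00, cached_input=0.50, output=8.00),
}


def call_cost(p: Pricing, input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """USD cost of one call; assumes cached_tokens is a subset of input_tokens."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (uncached * p.input
            + cached_tokens * p.cached_input
            + output_tokens * p.output) / 1_000_000


# Hypothetical call: 2.5M uncached input tokens and 125K output tokens.
for name, rates in DEEP_RESEARCH.items():
    print(f"{name}: ${call_cost(rates, 2_500_000, 0, 125_000):.2f}")
# o3-deep-research: $30.00
# o4-mini-deep-research: $6.00
```

Because every o3-deep-research rate is exactly 5x the matching o4-mini-deep-research rate, any given token mix costs 5x as much on o3-deep-research. The larger gap in our totals comes from token usage.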

These endpoints are both substantially more expensive than OpenAI’s standard o3 and o4-mini endpoints, which are priced at:

➤ o3: $2 /M input ($0.50 cached input), $8 /M output

➤ o4-mini: $1.10 /M input ($0.275 cached input), $4.40 /M output
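The phrase "substantially more expensive" can be quantified with the sketch below. It divides each Deep Research rate by the matching standard rate from the two price lists above. The helper name `markups` is illustrative.

```python
# USD per 1M tokens: (input, cached input, output), as listed above.
STANDARD = {"o3": (2.00, 0.50, 8.00), "o4-mini": (1.10, 0.275, 4.40)}
DEEP_RESEARCH = {"o3": (10.00, 2.50, 40.00), "o4-mini": (2.00, 0.50, 8.00)}


def markups(base: str) -> tuple:
    """Deep Research rate divided by the standard rate, per pricing line."""
    return tuple(
        round(deep / std, 2)
        for deep, std in zip(DEEP_RESEARCH[base], STANDARD[base])
    )


for base in STANDARD:
    print(base, markups(base))
# o3 (5.0, 5.0, 5.0)
# o4-mini (1.82, 1.82, 1.82)
```

o3-deep-research carries a flat 5x premium over standard o3. o4-mini-deep-research is roughly 1.8x standard o4-mini.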
